Work

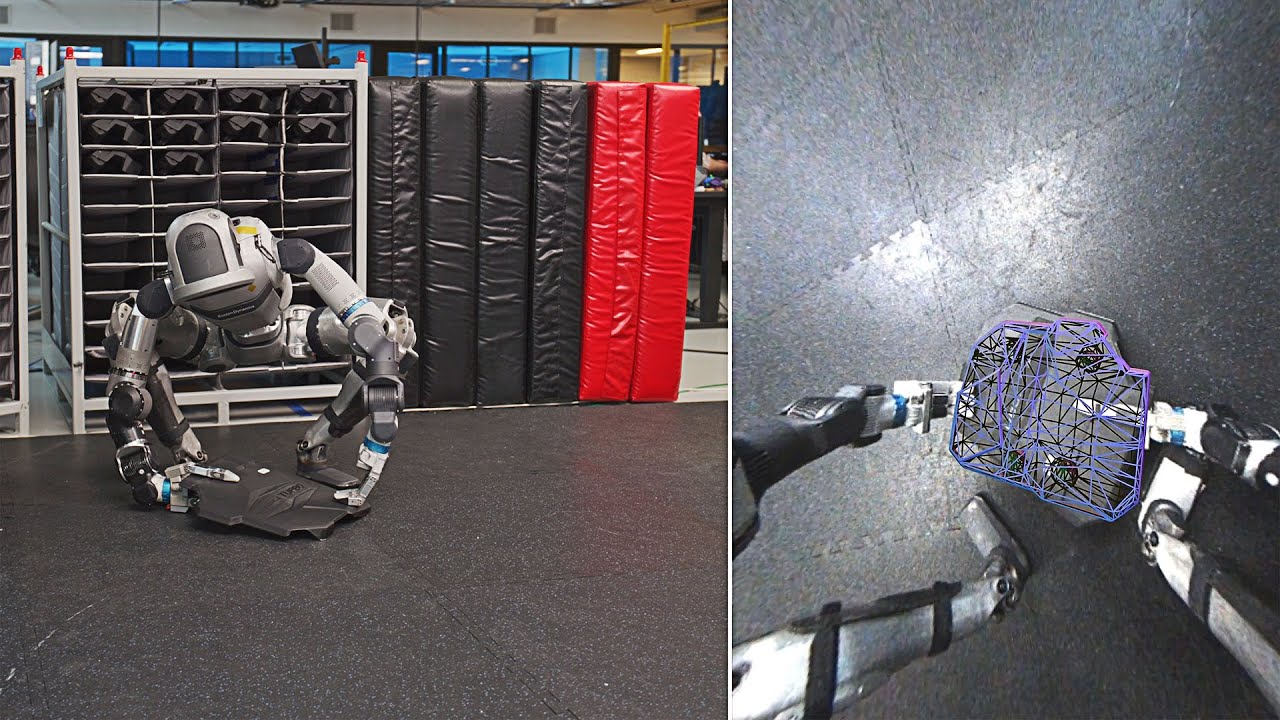

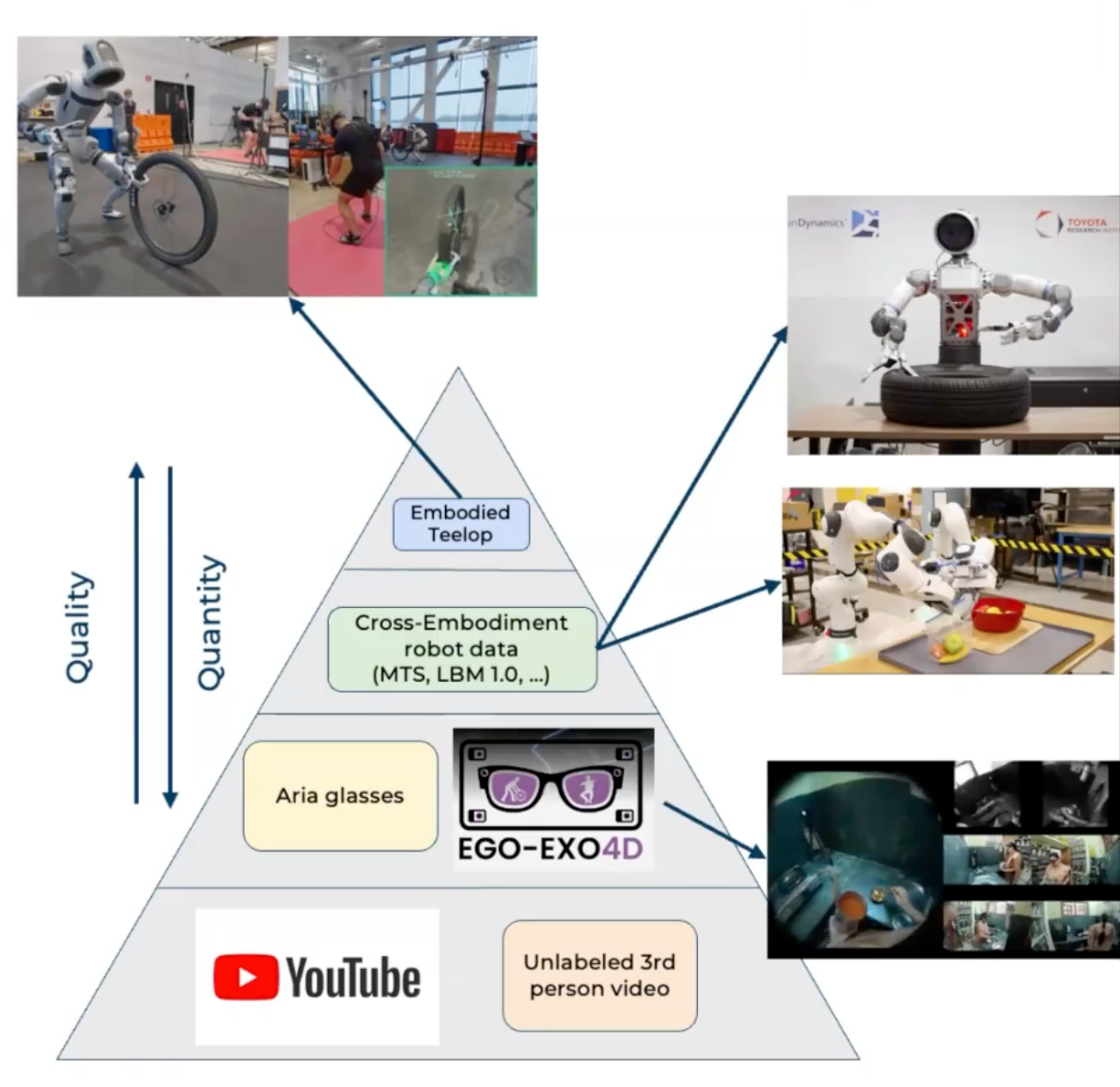

I'm a research scientist and technical lead on the Atlas VLA team. I currently focus on scaling humanoid policies through alternative data sources such as egocentric human data (more details soon). My work is often split between ML training, robot deployment, and coordinating data collection efforts. Previously, I was responsible for the vision and whole-body manipulation that powered the Atlas sequencing demos.

Publications

NeuralFeels with neural fields: Visuo-tactile perception for in-hand manipulation

Sudharshan Suresh, Haozhi Qi, Tingfan Wu, Taosha Fan, Luis Pineda, Mike Lambeta, Jitendra Malik, Mrinal Kalakrishnan, Roberto Calandra, Michael Kaess, Joe Ortiz, Mustafa Mukadam

General In-Hand Object Rotation with Vision and Touch

Haozhi Qi, Brent Yi, Sudharshan Suresh, Mike Lambeta, Yi Ma, Roberto Calandra, Jitendra Malik

MidasTouch: Monte-Carlo inference over distributions across sliding touch

Sudharshan Suresh, Zilin Si, Stuart Anderson, Michael Kaess, Mustafa Mukadam

ShapeMap 3-D: Efficient shape mapping through dense touch and vision

Sudharshan Suresh, Zilin Si, Joshua Mangelson, Wenzhen Yuan, Michael Kaess

Tactile SLAM: Real-time inference of shape and pose from planar pushing

Sudharshan Suresh, Maria Bauza, Peter Yu, Joshua Mangelson, Alberto Rodriguez, Michael Kaess

Active SLAM using 3D submap saliency for underwater volumetric exploration

Sudharshan Suresh, Paloma Sodhi, Joshua Mangelson...

IEEE Intl. Conf. on Robotics and Automation, ICRA, 2020